Dimension-Independent MCMC Sampling Algorithms

Inspired by the recent development of the preconditioned Crank-Nicolson (pCN) sampler and other function-space MCMC samplers, and by the independent development of Riemann manifold methods and stochastic Newton methods, we propose a class of algorithms that combines the benefits of both approaches. The result is several dimension-independent, likelihood-informed (DILI) sampling algorithms. These algorithms are effective at producing minimally correlated samples from very high-dimensional distributions.

Motivation:

When an inverse problem is cast in a Bayesian setting, its solution is a probability distribution over the space in which the quantity of interest lives. When that quantity is, in principle, a field (a function), any discretization of it is very high-dimensional. Formulating algorithms directly in the (infinite-dimensional) function space yields dimension-independent algorithms. Their performance does not degrade as the discretization is refined, which overcomes the so-called curse of dimensionality. These algorithms are often still too expensive to use routinely in practice. They can, however, be run offline and on toy models to benchmark how well inexpensive approximate alternatives quantify uncertainty in very high-dimensional problems. They are therefore important tools for both the theoretician and the practitioner.
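The sketch below illustrates what dimension-independence means in practice. It compares the preconditioned Crank-Nicolson (pCN) proposal with a standard random-walk Metropolis proposal on a toy problem. The problem uses a Gaussian prior N(0, C) with C = diag(j^-2), truncated to n modes, and a single noisy observation of u_1 + u_2. The prior, the observation, the noise level, the step size, and all function names are illustrative assumptions, not part of the original project. The pCN proposal preserves the prior, so its acceptance ratio involves only the data misfit and its acceptance rate should not depend on n. The random-walk ratio also includes the prior density, and the change in that density grows with n.

```python
import math
import random


def prior_std(n):
    """Standard deviations of the hypothetical prior N(0, C), C = diag(j^-2), j = 1..n."""
    return [1.0 / j for j in range(1, n + 1)]


def sample_prior(std, rng):
    """Draw one sample from N(0, C) with diagonal C."""
    return [s * rng.gauss(0.0, 1.0) for s in std]


def misfit(u, y=1.0, sigma=0.5):
    """Hypothetical data misfit Phi(u) for one noisy observation y of u_1 + u_2."""
    return 0.5 * ((y - (u[0] + u[1])) / sigma) ** 2


def prior_energy(u, std):
    """0.5 * <u, C^{-1} u>, the negative log prior density up to a constant."""
    return 0.5 * sum((x / s) ** 2 for x, s in zip(u, std))


def pcn_acceptance(n, beta=0.3, iters=2000, seed=0):
    """Acceptance rate of pCN: v = sqrt(1 - beta^2) u + beta xi, xi ~ N(0, C).

    The proposal is reversible with respect to the prior, so the Metropolis
    ratio involves only the misfit Phi and never the prior density.
    """
    rng = random.Random(seed)
    std = prior_std(n)
    rho = math.sqrt(1.0 - beta ** 2)
    u = sample_prior(std, rng)
    accepted = 0
    for _ in range(iters):
        xi = sample_prior(std, rng)
        v = [rho * a + beta * b for a, b in zip(u, xi)]
        log_alpha = misfit(u) - misfit(v)
        if rng.random() < math.exp(min(0.0, log_alpha)):
            u = v
            accepted += 1
    return accepted / iters


def rwm_acceptance(n, beta=0.3, iters=2000, seed=0):
    """Acceptance rate of random-walk Metropolis with prior-shaped steps: v = u + beta xi.

    Here the Metropolis ratio must include the prior energy, whose change
    grows with n when beta is held fixed.
    """
    rng = random.Random(seed)
    std = prior_std(n)
    u = sample_prior(std, rng)
    energy_u = misfit(u) + prior_energy(u, std)
    accepted = 0
    for _ in range(iters):
        xi = sample_prior(std, rng)
        v = [a + beta * b for a, b in zip(u, xi)]
        energy_v = misfit(v) + prior_energy(v, std)
        if rng.random() < math.exp(min(0.0, energy_u - energy_v)):
            u, energy_u = v, energy_v
            accepted += 1
    return accepted / iters
```

Call `pcn_acceptance(n)` and `rwm_acceptance(n)` for n = 10 and n = 400 with the same `beta`. The pCN rate should stay roughly constant, while the random-walk rate should drop toward zero. To keep a fixed acceptance rate, the random-walk step `beta` would have to shrink roughly like n^(-1/2).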

Methodology:

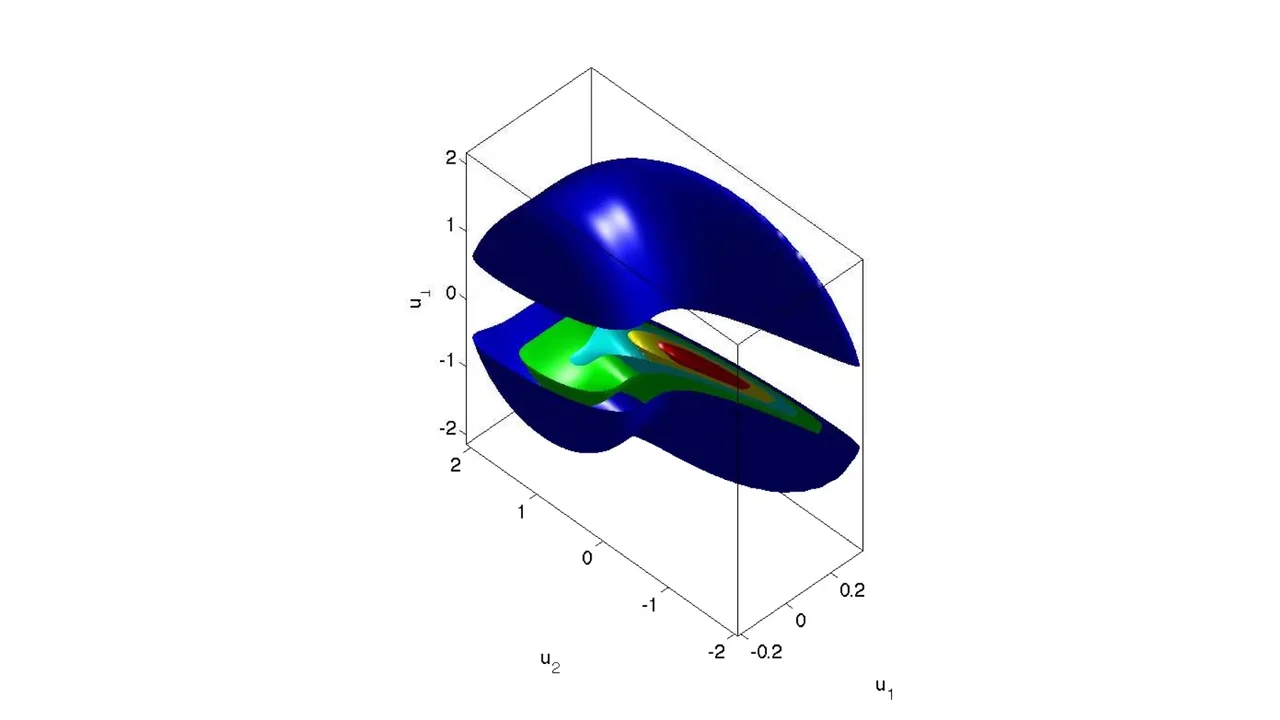

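One way to see how likelihood information can be combined with a function-space proposal is to split the parameter into two parts. The first is a likelihood-informed subspace (LIS), spanned by the leading eigenvectors of the prior-preconditioned Hessian of the data misfit. The second is its complement, where the posterior stays close to the prior. The minimal sketch below works in whitened coordinates (the prior becomes N(0, I)) and runs pCN with a small step on the LIS and a large step on the complement. It assumes a hypothetical toy problem: prior C = diag(j^-2) and one observation of u_1 + u_2 with noise level 0.5. For this problem the Hessian is rank one and the LIS is known in closed form. This is a simplified illustration under those assumptions, not the DILI samplers of [1] or [2]. The names, threshold, and step sizes are hypothetical.

```python
import math
import random

# Hypothetical toy problem (not from the original project): prior N(0, C) with
# C = diag(j^-2) and one noisy observation y = u_1 + u_2 + noise.
SIGMA = 0.5
Y_OBS = 1.0


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def whitened_direction(n):
    """w = L^T g with L = diag(1/j) and g = (1, 1, 0, ..., 0).

    With u = L z the prior on z is N(0, I) and the forward map is z -> w.z.
    """
    w = [0.0] * n
    w[0], w[1] = 1.0, 0.5
    return w


def lis_basis(n, threshold=1.0):
    """Eigen-directions of the prior-preconditioned Hessian w w^T / SIGMA^2 above threshold.

    For this rank-one Hessian the only nonzero eigenvalue is |w|^2 / SIGMA^2 with
    eigenvector w / |w|; a realistic problem would need a numerical eigensolver.
    """
    w = whitened_direction(n)
    eig = dot(w, w) / SIGMA ** 2
    if eig <= threshold:
        return eig, []
    norm = math.sqrt(dot(w, w))
    return eig, [[x / norm for x in w]]


def misfit(z, w):
    """Data misfit Phi(z) = 0.5 * ((Y_OBS - w.z) / SIGMA)^2."""
    return 0.5 * ((Y_OBS - dot(w, z)) / SIGMA) ** 2


def split_pcn_acceptance(n, beta_lis, beta_perp, iters=2000, seed=0):
    """Acceptance rate of pCN with step beta_lis on the LIS and beta_perp on its complement.

    Each block is an AR(1) move that preserves N(0, I) on its own subspace, so
    the joint proposal preserves the prior and the acceptance ratio uses Phi only.
    """
    rng = random.Random(seed)
    w = whitened_direction(n)
    _, basis = lis_basis(n)
    rho_lis = math.sqrt(1.0 - beta_lis ** 2)
    rho_perp = math.sqrt(1.0 - beta_perp ** 2)
    z = [rng.gauss(0.0, 1.0) for _ in range(n)]
    accepted = 0
    for _ in range(iters):
        xi = [rng.gauss(0.0, 1.0) for _ in range(n)]
        # Apply the complement step size everywhere first ...
        v = [rho_perp * a + beta_perp * b for a, b in zip(z, xi)]
        # ... then swap the component along each LIS direction for the LIS step.
        for e in basis:
            cz, cx = dot(e, z), dot(e, xi)
            delta = (rho_lis - rho_perp) * cz + (beta_lis - beta_perp) * cx
            v = [vi + delta * ei for vi, ei in zip(v, e)]
        log_alpha = misfit(z, w) - misfit(v, w)
        if rng.random() < math.exp(min(0.0, log_alpha)):
            z = v
            accepted += 1
    return accepted / iters
```

With this setup, `split_pcn_acceptance(200, 0.3, 0.9)` should accept noticeably more often than plain pCN, `split_pcn_acceptance(200, 0.9, 0.9)`. Both take the same large step in the 199 complement directions, but the data constrain only the one LIS direction, and only there does the split version use a small step.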

Outcomes:

A very preliminary version of such a sampler was introduced in [1]; see the references therein for background on earlier dimension-independent and likelihood-informed methods. Several more elaborate versions will appear in the forthcoming [2]. The methodology has so far been applied successfully to examples from numerical weather prediction, groundwater, conditioned diffusions, and subsurface reconstruction.

We are currently applying these methods to further real-world problems. These include the areas above as well as seismology, oceanography, finance, green wireless, clean combustion, and others. In parallel, we are extending these results to develop more effective samplers and working to establish rigorous theoretical convergence properties.

Collaborators

- Kody Law (KAUST)

- Raul Tempone (KAUST)

- Tiangang Cui (MIT)

- Youssef Marzouk (MIT)

- Andrew Stuart (Warwick)

- Marco Iglesias (Warwick)

Publications

- [1] Law, K.J.H. Proposals which speed-up function-space MCMC. Journal of Computational and Applied Mathematics 262, 127-138 (2014).

- [2] Cui, T., Law, K.J.H., Marzouk, Y. Likelihood-informed sampling of Bayesian nonparametric inverse problems. In preparation.

- [3] Law, K.J.H., Stuart, A.M. Evaluating data assimilation algorithms. Monthly Weather Review 140, 3757-3782 (2012).

- [4] Iglesias, M.A., Law, K.J.H., Stuart, A.M. Evaluation of Gaussian approximations for data assimilation in reservoir models. Computational Geosciences 17(5), 851-885 (2013).

- [5] Cotter, S.L., Roberts, G.O., Stuart, A.M., White, D. MCMC methods for functions: modifying old algorithms to make them faster. Statistical Science, to appear.