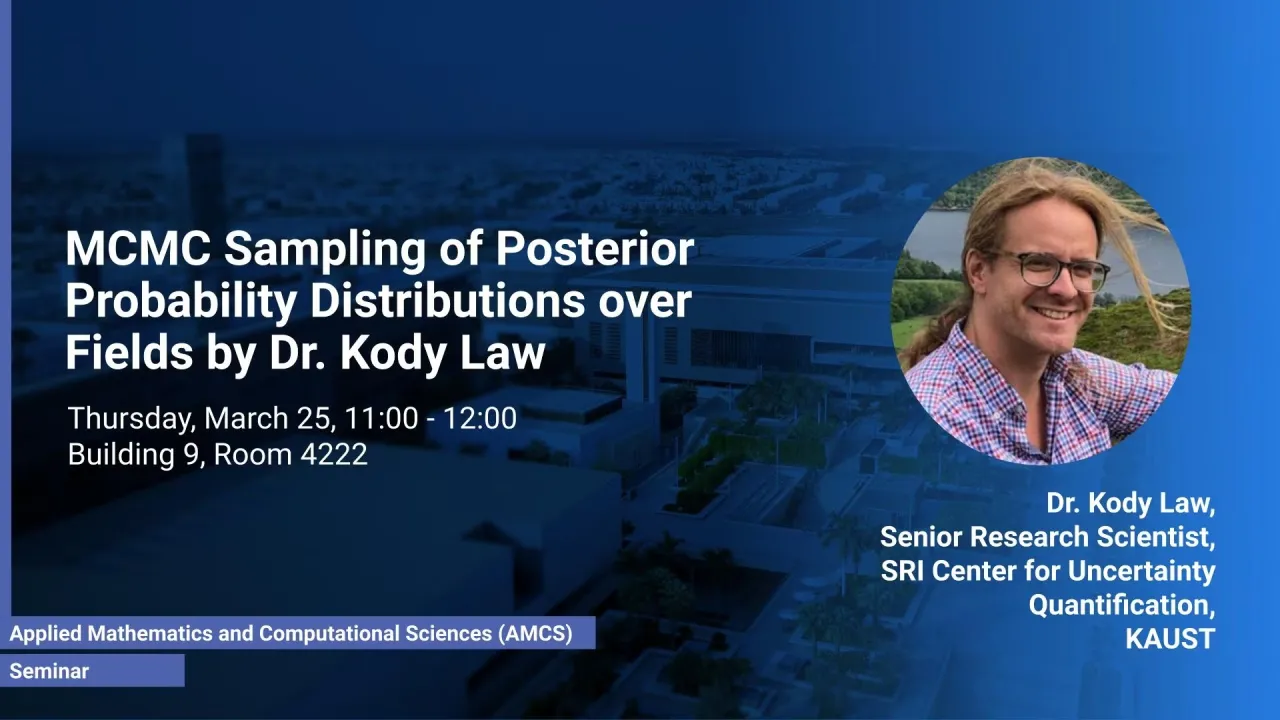

MCMC Sampling of Posterior Probability Distributions over Fields by Dr. Kody Law


Overview

Abstract

In many problems in science and engineering, one would like to perform inference on parametric fields in space and/or time in order to reduce uncertainties and improve predictive capabilities, for example in the computation of quantities of interest such as model outputs or their expectations. Examples of such fields include the initial condition of the fluid dynamical equations in numerical weather prediction and oceanography, the permeability and porosity in multiphase subsurface flow for oil exploration, and the forcing of a stochastic dynamical system in molecular dynamics. These problems combine Big Data, Big Models, and Prior Knowledge. I will explain their fundamental ingredients, which include (i) prior simulation, or forward uncertainty quantification, and (ii) the incorporation of data via Bayes' theorem, leading to posterior simulation.
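The two ingredients can be illustrated with a minimal sketch built on assumptions of our own, not taken from the talk: a Gaussian prior on a 1-D field on [0, 1] represented by a truncated sine series (Karhunen-Loève style), a hypothetical observation operator that reads off point values of the field, and Gaussian observational noise. Prior simulation is plain Monte Carlo. Bayes' theorem enters through the fact that the posterior has density proportional to exp(-Φ(u; y)) with respect to the prior, where Φ is the data misfit; the sketch uses that density as self-normalized importance weights on prior draws, which is a simple but generally inefficient way to estimate posterior expectations. The names `sample_prior`, `misfit`, `obs_idx`, and `noise_std` are hypothetical.

```python
import numpy as np

def sample_prior(n_grid, n_modes, rng, alpha=2.0):
    """Draw one Gaussian random field on [0, 1] from a truncated sine-series
    (Karhunen-Loeve style) expansion with coefficient std k**(-alpha)."""
    x = np.linspace(0.0, 1.0, n_grid)
    k = np.arange(1, n_modes + 1)
    basis = np.sqrt(2.0) * np.sin(np.pi * np.outer(x, k))  # (n_grid, n_modes)
    xi = rng.standard_normal(n_modes)                        # i.i.d. N(0, 1)
    return basis @ (k ** (-alpha) * xi)

def misfit(u, obs_idx, y, noise_std):
    """Data misfit Phi(u; y) for Gaussian noise; the (hypothetical) forward
    map G(u) simply evaluates the field at the grid indices obs_idx."""
    r = y - u[obs_idx]
    return 0.5 * np.sum(r ** 2) / noise_std ** 2

rng = np.random.default_rng(0)
n_grid, n_modes, noise_std = 101, 50, 0.1
obs_idx = np.array([25, 50, 75])

# Synthetic data from a "true" field drawn from the prior (assumption).
u_true = sample_prior(n_grid, n_modes, rng)
y = u_true[obs_idx] + noise_std * rng.standard_normal(obs_idx.size)

# (i) Prior simulation / forward UQ: Monte Carlo estimate of E[QoI],
# with the QoI taken to be the spatial mean of the field (assumption).
draws = np.array([sample_prior(n_grid, n_modes, rng) for _ in range(5000)])
qoi = draws.mean(axis=1)
prior_estimate = qoi.mean()

# (ii) Bayes' theorem: weight each prior draw by exp(-Phi), normalized.
phi = np.array([misfit(u, obs_idx, y, noise_std) for u in draws])
w = np.exp(-(phi - phi.min()))   # shift by the minimum for numerical stability
w /= w.sum()
posterior_estimate = np.sum(w * qoi)
ess = 1.0 / np.sum(w ** 2)       # effective sample size of the weights
```

The effective sample size `ess` typically shrinks as the data become more informative, since most prior draws then receive negligible weight; this degeneracy is one reason the posterior is usually sampled with MCMC instead.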

There is no general method for direct posterior simulation, and it is indeed a challenging problem. One solution is to use Markov chain Monte Carlo (MCMC) methods. Here one constructs an ergodic Markov chain that has the desired posterior distribution as its invariant distribution; evolving this chain algorithmically then yields Monte Carlo samples from the posterior. We will discuss the most famous version, the Metropolis-Hastings algorithm, which takes as a design "parameter" a proposal Markov chain and imposes an accept/reject step that corrects the samples so that they are distributed according to the posterior. The samples are, however, correlated, and their quality depends on this correlation, which in turn depends on the proposal.

In particular, the proposal has to be chosen carefully in order for the algorithm to be well defined on function space. Once this is established, the algorithm is "dimension-independent", in the sense that its performance does not depend on the dimension of the discretization of the field. Within the class of proposals that preserve this property, those that incorporate information about the likelihood perform better. The resulting algorithms are referred to as dimension-independent likelihood-informed (DILI) samplers.
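The role of the proposal can be seen in a minimal sketch of Metropolis-Hastings on a hypothetical toy problem; it is not the DILI sampler described in the talk. For a proposal density q, a move from u to v is accepted with probability min{1, π(v) q(v, u) / (π(u) q(u, v))}. A standard random walk must include the prior density ratio, which degrades as the discretization dimension d grows. The preconditioned Crank-Nicolson (pCN) proposal, v = sqrt(1 - β²) u + β ξ with ξ drawn from the Gaussian prior N(0, C), is reversible with respect to that prior, so its acceptance probability reduces to min{1, exp(Φ(u) - Φ(v))} and depends only on the likelihood; pCN is a well-known example of a proposal that is well defined on function space. The functions `make_problem`, `log_prior`, and `mh`, the step size `beta`, and the three-point observation setup are all assumptions made for illustration.

```python
import numpy as np

def make_problem(d, rng, alpha=2.0, noise_std=0.5):
    """Hypothetical toy inverse problem: d sine-series coefficients a_k with
    prior N(0, k**(-2*alpha)), observed via noisy point values at 3 locations."""
    k = np.arange(1, d + 1)
    prior_std = k ** (-alpha)
    x_obs = np.array([0.25, 0.5, 0.75])
    G = np.sqrt(2.0) * np.sin(np.pi * np.outer(x_obs, k))  # linear forward map, (3, d)
    a_true = prior_std * rng.standard_normal(d)
    y = G @ a_true + noise_std * rng.standard_normal(x_obs.size)

    def phi(a):
        """Negative log-likelihood (data misfit) Phi(a; y)."""
        r = y - G @ a
        return 0.5 * np.sum(r ** 2) / noise_std ** 2

    return prior_std, phi

def log_prior(a, prior_std):
    """Log density of the Gaussian prior, up to an additive constant."""
    return -0.5 * np.sum((a / prior_std) ** 2)

def mh(prior_std, phi, beta, n_steps, rng, proposal="pcn"):
    """Metropolis-Hastings with a random-walk ("rw") or pCN ("pcn") proposal.
    Returns the chain and the empirical acceptance rate."""
    d = prior_std.size
    a = prior_std * rng.standard_normal(d)   # start from a prior draw
    phi_a = phi(a)
    n_accept = 0
    chain = np.empty((n_steps, d))
    for i in range(n_steps):
        xi = prior_std * rng.standard_normal(d)   # xi ~ N(0, C), C = prior covariance
        if proposal == "pcn":
            v = np.sqrt(1.0 - beta ** 2) * a + beta * xi
        else:
            v = a + beta * xi
        phi_v = phi(v)
        # pCN is prior-reversible, so only the likelihood enters the ratio;
        # the symmetric random walk also needs the prior density ratio.
        log_accept = phi_a - phi_v
        if proposal != "pcn":
            log_accept += log_prior(v, prior_std) - log_prior(a, prior_std)
        if np.log(rng.uniform()) < log_accept:
            a, phi_a = v, phi_v
            n_accept += 1
        chain[i] = a
    return chain, n_accept / n_steps

rng = np.random.default_rng(1)
results = {}
for d in (10, 100, 1000):
    prior_std, phi = make_problem(d, rng)
    _, acc_rw = mh(prior_std, phi, beta=0.2, n_steps=2000, rng=rng, proposal="rw")
    _, acc_pcn = mh(prior_std, phi, beta=0.2, n_steps=2000, rng=rng, proposal="pcn")
    results[d] = (acc_rw, acc_pcn)
    print(f"d={d:5d}  random-walk acceptance={acc_rw:.3f}  pCN acceptance={acc_pcn:.3f}")
```

With β held fixed, one should expect the random-walk acceptance rate to collapse toward zero as d grows, while the pCN rate stays roughly stable across discretizations; this is the "dimension-independent" behaviour described above. Likelihood-informed samplers go further by adapting the proposal in the directions where the data are informative, which this sketch does not attempt.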

Brief Biography

Kody Law is a Senior Research Scientist in the SRI Center for Uncertainty Quantification at KAUST. He received his Ph.D. in Mathematics from the University of Massachusetts in 2010 and subsequently held a postdoctoral research fellowship at the Mathematics Institute of Warwick University until joining KAUST in 2013. During his short academic career, he has given more than 50 invited lectures around the world and published 35 peer-reviewed journal articles and book chapters in computational applied mathematics, physics, and dynamical systems. His current research focuses on inverse uncertainty quantification in high dimensions, i.e., on large- and extreme-scale data assimilation, filtering, and statistical inverse problems.